An Unexpected Goodbye

Over the holidays I felt sad realizing I had likely made my last project without the help of AI.

When I was an undergraduate in UCLA’s Design Media Arts program and later a master’s candidate at Paris College of Art, several ideas came up again and again. One of them has resurfaced in the wake of the AI wave: materiality. Let me explain. Throughout the creative process, an artist faces choices. The tools and materials you choose to realize a work can support or undermine the concept behind it.

In software, these choices can be aesthetic. For instance, in Patatap everything drawn on screen is made with vector graphics.1 This gives it a smooth, crisp look. The choices can also be about efficiency. In the VR music video What You Don’t Know, I needed to ground the user in three-dimensional space.2 I chose to do this with a particle system (seen in the video below as the little white specks in the center of the interface). The system ended up being a custom component that leveraged the device's GPU to render thousands of snowflakes in a single draw call, making it possible to run the music video on low-powered but cheaper and more accessible devices like the Oculus Go.3 Lastly, the choices can be about process. When I was learning Processing under Casey Reas, I made a software sketch about a recurring dream in which I was falling from the sky.4 In the dream, I held a stick in each hand to steer where I was falling. This was something extremely personal, so I decided to hand-draw all the assets myself. The do-it-yourself process reinforced the authenticity of just how personal the sketch was.

These are just a few examples of the choices I have made. Every project involves many choices, of many different kinds, and every project demands that you make sound decisions. With agentic AI workflows, it is all too easy to offload those choices: let the AI choose. Today I will share how agentic workflows have seeped into my process.

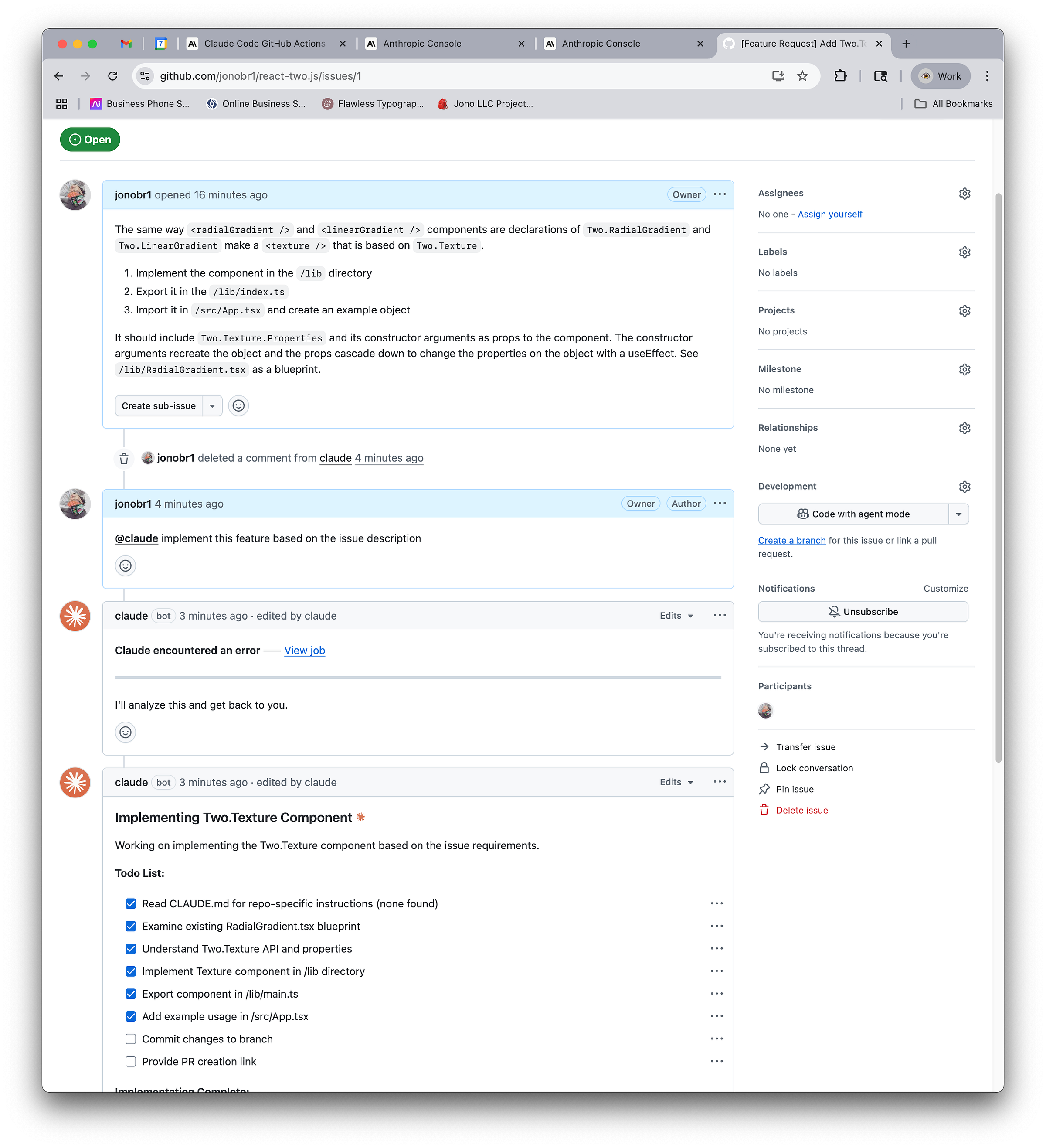

About this time last year, I started using Large Language Models (LLMs) like ChatGPT and Claude regularly for work. As a freelance collaborator, I often found myself needing to get something done that was outside my zone of expertise. I remember using LLMs to build out header authentication for the microservices that support the Living Encyclopedia,5 definitely outside my zone. A need like that is too small to justify hiring another person. In spring, I started using LLMs to help game plan projects, both client and personal. At the time, it felt like a kind of smart notebook: I could keep a tally of all my creative inquiries and have the LLM follow up with preliminary research on each one. In summer, I integrated LLMs into some of my source code repositories. This allowed me to asynchronously delegate tasks (particularly code-related issues) to an LLM and then review its work. See the screenshot below. In autumn, I had LLMs build out end-to-end product roadmaps and then execute them. Again, the Living Encyclopedia became a testbed, this time for a custom chat interface based on Matrix Chat’s protocol.6

Today, I use LLMs to offload a lot of work I would otherwise have done myself, tasks that would have taken a long time. AI is in my editors, connected to my repositories, and installed in my terminals, and I have AI apps downloaded to converse, search, and research for me. In all, I have spent north of $1,000 over the last year on various AI services and products. That covers subscriptions as well as pay-per-use token charges from programmatically accessing AI services. Most of it goes to LLMs, but some goes to educational material on prompting and to generative AI (GenAI) services that produce images, videos, 3D models, audio, design artifacts, and more. The landscape moves fast, and the initial boost I got last year has motivated me to try out services as they come out. I am constantly assessing whether a product or service improves or expands my workflow.

In a way, it is exciting. But over the holidays, it dawned on me: an unreleased client project I completed early last year is probably the last project I will ever write by hand. It left me feeling nostalgic and sad. The way I had been working for the last twenty years had not changed that drastically, and then, all of a sudden, that way of working ended. Without realizing it, I had offloaded a lot of choices. Were they artistic or creative choices? Or were they more minute and mundane than that? Is that even possible? Does it even matter? Ultimately, very few people see, or even care about, the code.

In New Dark Age, the author James Bridle describes a concept from media studies and philosophy called the hyperobject.7 It is a system (technological or environmental) so complex that a human cannot truly understand or predict it. Think of a social media engagement algorithm, the weather, or an electron. AI is one of these hyperobjects, and I have the AI make choices on my behalf. I am not sure whether it supports or damages my concept. I am not even sure I could explain how it could. It is that complex.

–Jono
